How to use AI privately in Brave Browser (2 methods)

Introduction

Brave Browser just released version 1.69, which lets users plug their own AI model into Leo, the browser's built-in assistant. In my opinion, this is HUGE news, since having an AI assistant always at hand can help you quite a lot. In this guide, I will show you two ways to use this new feature to improve both your browsing experience and your privacy.

Method 1 (More private)

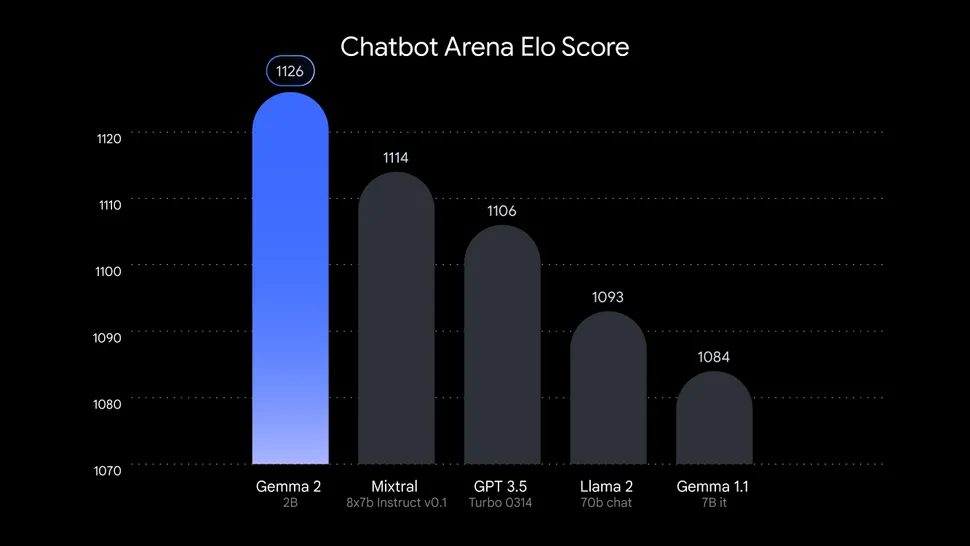

If you want maximum privacy, you can run a model locally. I'll show you the most lightweight (yet still good) model that I've found so far. It runs without a GPU or a powerful PC, and even with 4 GB of RAM if you don't have too many other processes open. Gemma 2 2B is the model we are talking about here:

(Image credit: Google DeepMind)

For such a lightweight model, it performs incredibly well.
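To put the 4 GB figure in perspective, here is a rough back-of-the-envelope estimate of the memory needed just to hold the weights. It assumes (my assumptions, not measured values) that Gemma 2 2B has about 2.6 billion parameters and that a 4-bit quantized download costs roughly 4.5 bits per weight once the quantization scales are counted. The context cache, the Ollama runtime and the operating system all need memory on top of this.

```python
# Rough estimate of the RAM needed only for a model's weights.
# Assumptions (not measured): ~2.6e9 parameters for Gemma 2 2B and
# ~4.5 bits per weight for a 4-bit quantized file (4-bit values plus scales).
# Context cache, runtime and OS memory are NOT included.

GEMMA2_2B_PARAMS = 2.6e9  # assumed parameter count


def weight_memory_gb(num_params: float, bits_per_weight: float) -> float:
    """Return the size of the weights in gigabytes (10**9 bytes)."""
    bytes_total = num_params * bits_per_weight / 8
    return bytes_total / 1e9


if __name__ == "__main__":
    for label, bits in [("4-bit quantized", 4.5), ("16-bit", 16.0)]:
        size = weight_memory_gb(GEMMA2_2B_PARAMS, bits)
        print(f"{label:>16}: ~{size:.2f} GB")
```

Under these assumptions the quantized weights come to roughly 1.5 GB versus about 5.2 GB at 16-bit precision, which is why a small quantized model can fit on a 4 GB machine.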

Download Ollama and Gemma 2 2B

The first step is downloading Ollama. You can find their official site here and follow the installation steps for your operating system. Once Ollama is set up, you can pull the model you want. In our case, we will be using Gemma 2 2B:

For Docker

docker exec -it ollama ollama pull gemma2:2b

For a normal installation

ollama pull gemma2:2b

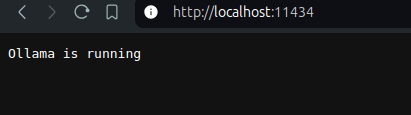
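Since `ollama pull` talks to the local Ollama server, you can also confirm the download over Ollama's HTTP API, which lists locally installed models at `/api/tags`. This is a minimal standard-library sketch; it assumes Ollama is on its default address (`http://localhost:11434`) and that each entry in the returned `models` list carries a `name` field such as `gemma2:2b`.

```python
# Sketch: confirm that a model was pulled, using Ollama's local HTTP API.
# Assumes Ollama listens on its default address, http://localhost:11434.
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"


def model_names(tags: dict) -> list:
    """Extract model names from the JSON returned by GET /api/tags."""
    return [m.get("name", "") for m in tags.get("models", [])]


def has_model(tags: dict, wanted: str) -> bool:
    """True if `wanted` (e.g. "gemma2:2b") appears in the local model list."""
    return wanted in model_names(tags)


def fetch_tags(base_url: str = OLLAMA_URL, timeout: float = 5.0) -> dict:
    """Ask the running Ollama server which models are installed locally."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
        return json.load(resp)


if __name__ == "__main__":
    try:
        print("gemma2:2b installed:", has_model(fetch_tags(), "gemma2:2b"))
    except (OSError, ValueError) as err:
        # Connection errors, HTTP errors or a non-JSON reply.
        print("Could not query Ollama:", err)
```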

Once the model is downloaded, you can check that your installation is working by opening http://localhost:11434/ in your browser. If you see the following message, you are good to go:

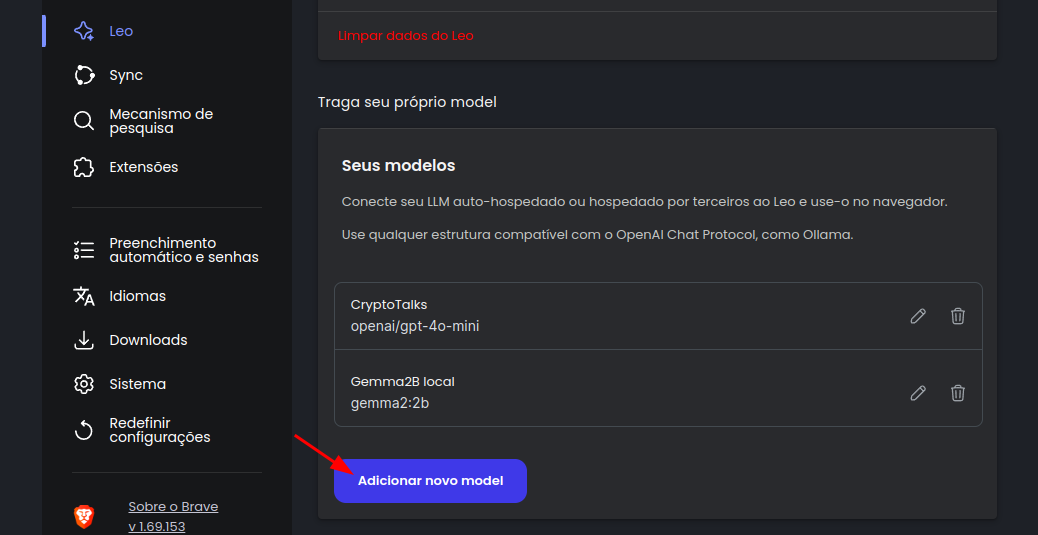
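The same check can be scripted. This sketch assumes Ollama's default port and that its root path answers with the plain-text banner "Ollama is running"; anything else, including a refused connection, is treated as "not running".

```python
# Sketch: the same health check as opening http://localhost:11434/ in a browser.
# Assumes Ollama's default port; the root path answers "Ollama is running".
import http.client
import urllib.request

OLLAMA_URL = "http://localhost:11434/"
BANNER = "Ollama is running"


def looks_like_ollama(body: str) -> bool:
    """True if a response body matches Ollama's root-path banner."""
    return body.strip() == BANNER


def ollama_is_running(url: str = OLLAMA_URL, timeout: float = 3.0) -> bool:
    """Return True if the server at `url` answers like Ollama, False otherwise."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return looks_like_ollama(resp.read().decode("utf-8", errors="replace"))
    except (OSError, http.client.HTTPException):
        # Refused connections, timeouts, HTTP errors and malformed replies
        # all count as "no working Ollama server" for this check.
        return False


if __name__ == "__main__":
    print("Ollama reachable:", ollama_is_running())
```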

Brave setup for Gemma 2

In Brave Browser, go to Settings and click on "Leo". Once you are inside the Leo settings, click on "Add new model".

For the new model settings, use the following:

Label: Anything you want; it is the name you will see in the browser.

Model Request Name: Use the exact model name. You can find it on Ollama's GitHub page or with the ollama list command. In our case, it is gemma2:2b.

Server Endpoint: http://localhost:11434/v1/chat/completions

API Key: Leave it empty.

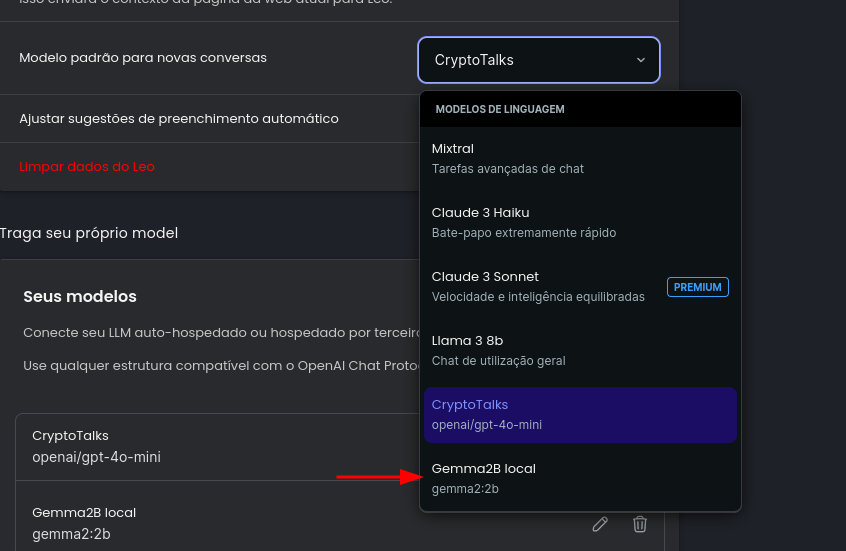
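If Leo does not answer, it helps to call the endpoint yourself. Below is a minimal standard-library sketch. It assumes Brave Leo sends a standard OpenAI-style chat completion request (a JSON body with `model` and `messages`) and that the reply follows the OpenAI response shape, which is what Ollama's `/v1/chat/completions` compatibility layer is meant to mimic. The prompt is only a placeholder.

```python
# Sketch: send an OpenAI-style chat request to the endpoint configured in Leo.
# Assumptions: Ollama's OpenAI-compatible API at /v1/chat/completions,
# the gemma2:2b model pulled earlier, and no API key for a local server.
import json
import urllib.request

ENDPOINT = "http://localhost:11434/v1/chat/completions"
MODEL = "gemma2:2b"


def build_chat_request(endpoint: str, model: str, prompt: str) -> urllib.request.Request:
    """Build a chat completion POST request without any Authorization header."""
    payload = {
        "model": model,  # must match "Model Request Name" exactly
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,
    }
    return urllib.request.Request(
        endpoint,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_reply(response: dict) -> str:
    """Pull the assistant's text out of an OpenAI-style response body."""
    return response["choices"][0]["message"]["content"]


if __name__ == "__main__":
    req = build_chat_request(ENDPOINT, MODEL, "Say hello in one short sentence.")
    try:
        with urllib.request.urlopen(req, timeout=120) as resp:
            print(extract_reply(json.load(resp)))
    except (OSError, ValueError, KeyError) as err:
        print(f"Request to {ENDPOINT} failed: {err}")
```

With Ollama running, this should print a short reply from the model. If the model name does not match what you pulled, expect an error instead, which is the same mistake Leo would run into.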

Once that is set, your model is good to go! I also recommend making it your default model by changing the default model for chats on the same page.

Method 2 (less private, but smarter)

If you are fine with giving up a bit of privacy, Cryptotalks.ai is an excellent choice. It is basically a site that gives you a single API for nearly any AI model you want, including Gemini, OpenAI models, Llama, and many more.

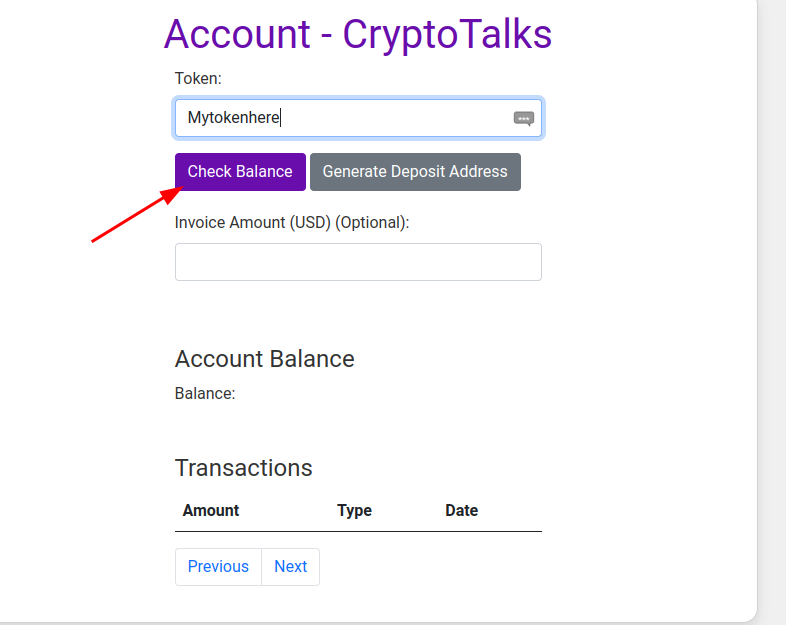

Getting an account at CryptoTalks

First, go to https://cryptotalks.ai/signup and create an account. Once that is done, you will receive a token. That is your API token, so don't lose it.

Next, go to your account page, enter your token, and make a deposit.

You can deposit with Bitcoin, either on-chain or through the Lightning Network. Once your deposit is detected, your account is ready and you can proceed.

Brave setup for Cryptotalks

The setup is very similar; only the values change:

Label: Anything you want; it is the name you will see in the browser.

Model Request Name: Use the exact model name. You can check the CryptoTalks docs to choose a model. Some example names are:

- openai/chatgpt-4o-latest

- openai/gpt-4o-mini

- anthropic/claude-3.5-sonnet

- google/gemini-flash-1.5

Server Endpoint: https://cryptotalks.ai/v1/chat/completions/

API Key: Use your token.
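The only real difference from the local setup is the API key. This sketch shows the request with a key attached, under two assumptions of mine: that the token is sent as an `Authorization: Bearer <token>` header (the usual OpenAI-style convention) and that it is read from a hypothetical `CRYPTOTALKS_TOKEN` environment variable rather than hard-coded. The response is also assumed to follow the OpenAI shape.

```python
# Sketch: OpenAI-style chat request to an endpoint that needs an API key.
# Assumptions: "Authorization: Bearer <token>" auth, an OpenAI-shaped response,
# and a hypothetical CRYPTOTALKS_TOKEN environment variable holding the token.
import json
import os
import urllib.request

ENDPOINT = "https://cryptotalks.ai/v1/chat/completions/"


def build_authed_request(endpoint: str, model: str, prompt: str, token: str) -> urllib.request.Request:
    """Build a chat completion POST that carries the API token as a Bearer header."""
    if not token:
        raise ValueError("an API token is required for this endpoint")
    payload = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        endpoint,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {token}",
        },
        method="POST",
    )


if __name__ == "__main__":
    token = os.environ.get("CRYPTOTALKS_TOKEN", "")
    if not token:
        print("Set CRYPTOTALKS_TOKEN first.")
    else:
        req = build_authed_request(ENDPOINT, "openai/gpt-4o-mini", "Hello!", token)
        try:
            with urllib.request.urlopen(req, timeout=60) as resp:
                print(json.load(resp)["choices"][0]["message"]["content"])
        except (OSError, ValueError, KeyError) as err:
            print("Request failed:", err)
```

Keeping the token in an environment variable is just a habit to avoid leaking it in scripts; in Brave you simply paste it into the API Key field.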

If you want to compare how the current models perform, I recommend checking this site.

Tests and conclusion

With Brave Leo and your own model, you can do things much faster. For example, you can get a summary of a TED talk:

Gemma 2 2B summary

And if I'm running my laptop on battery and don't want to spend extra energy on a local model, I can just use my CryptoTalks setup with whichever model I want, without an identifiable account.

If you enjoyed this article, you can share it with your friends or subscribe to The Self Hosting Art. Thank you for reading :)

You can also help with XMR(Monero):

8AWKRGyqQ6fdaLwGVAdVTbEP6ZttSXwcYWQWy7gnq6zceTngtJgaAr82Hxr2FY5bkCUJVerccH9XNFX1qWnZxuGYTU5bJ34