Introduction

If you don't have a powerful GPU but still want AI to help with your daily tasks, Open WebUI is the solution. It is a self-hosted web interface that can connect to different APIs and models, so you only pay per request/prompt.

Installing Docker

The first step is installing Docker. If you are using Ubuntu, you can follow our guide to set it up. Once Docker is running, proceed to the next step.
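If you are not sure whether Docker is already installed, a small check like the following can help. This is a minimal POSIX shell sketch: the `have_cmd` and `docker_status` names are hypothetical, and the install hint assumes Docker's official convenience script at get.docker.com rather than the steps from our Ubuntu guide.

```shell
#!/bin/sh
# Sketch: check for Docker before installing Open WebUI.
# The install hint assumes Docker's official convenience script (get.docker.com).
set -eu

# have_cmd NAME: succeeds if NAME is an executable on PATH.
have_cmd() {
  command -v "$1" >/dev/null 2>&1
}

# docker_status: reports whether Docker is installed; returns 1 if it is not.
docker_status() {
  if have_cmd docker; then
    echo "docker found at $(command -v docker)"
  else
    echo "docker missing; one option: curl -fsSL https://get.docker.com -o get-docker.sh && sudo sh get-docker.sh"
    return 1
  fi
}
```

Once Docker is in place, `sudo docker run --rm hello-world` is a quick way to confirm that the daemon can pull and start containers.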

Installing Open WebUI

To install Open WebUI, you can use the following command:

```shell
docker run -d -p 3848:8080 \
  -e ENABLE_ADMIN_CHAT_ACCESS=false \
  --add-host=host.docker.internal:host-gateway \
  -v open-webui:/app/backend/data \
  --name open-webui --restart always \
  ghcr.io/open-webui/open-webui:latest
```

You can customize the command to use the ports you want. The host port 3848 can be changed to any free port, but do not change 8080: that is the port Open WebUI listens on inside the container, and the application requires it. `ENABLE_ADMIN_CHAT_ACCESS=false` stops admin accounts from reading other users' chats, and the `open-webui` volume mounted at `/app/backend/data` keeps your data when the container is recreated.
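To make the port choice explicit, here is a sketch that wraps the same `docker run` command in a shell function. The `run_webui` function, its optional bind-address argument, and the `DRY_RUN` variable are illustrative names, not part of Open WebUI; only the host side of the `-p` mapping changes, while the container side stays at 8080.

```shell
#!/bin/sh
# Sketch: the docker run command above with the host port (and, optionally,
# the host address) as parameters. Set DRY_RUN=1 to print instead of run.
set -eu

CONTAINER_PORT=8080  # Open WebUI listens here inside the container; do not change

# run_webui [HOST_PORT] [BIND_ADDR]
run_webui() {
  local host_port="${1:-3848}"
  local bind_addr="${2:-}"
  local mapping="${host_port}:${CONTAINER_PORT}"
  if [ -n "$bind_addr" ]; then
    # e.g. 127.0.0.1:3848:8080 publishes the port on the loopback interface only
    mapping="${bind_addr}:${mapping}"
  fi
  ${DRY_RUN:+echo} docker run -d -p "$mapping" \
    -e ENABLE_ADMIN_CHAT_ACCESS=false \
    --add-host=host.docker.internal:host-gateway \
    -v open-webui:/app/backend/data \
    --name open-webui --restart always \
    ghcr.io/open-webui/open-webui:latest
}
```

For example, `run_webui 3848 127.0.0.1` publishes the port on loopback only, which is enough for a reverse proxy running on the same server. With a plain `-p 3848:8080`, Docker listens on all interfaces, so the app is also reachable at http://your-server-ip:3848, and Docker's own iptables rules can bypass host firewalls such as ufw. `DRY_RUN=1 run_webui 9000` prints the command without running it.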

Now that the application is running, you can serve it through a web server acting as a reverse proxy. I'll show an example using Caddy and one using nginx, but you can choose any.
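Before pointing a web server at the container, it helps to confirm that Open WebUI actually answers on the host port, since the first start may take a little while. A minimal sketch, assuming `curl` is installed and the host port is 3848; `wait_for_http` is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch: wait until Open WebUI answers on its local port.
# Assumes curl is installed and the container publishes port 3848.
set -eu

# wait_for_http URL [TRIES] [DELAY]: succeeds on the first response with an HTTP
# status below 400 (curl -f treats 400 and above as failure); gives up after TRIES.
wait_for_http() {
  local url="$1" tries="${2:-30}" delay="${3:-2}" i=1
  while [ "$i" -le "$tries" ]; do
    if curl -fsS -o /dev/null --max-time 5 "$url"; then
      echo "up: $url"
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  echo "no answer from $url after $tries attempts" >&2
  return 1
}
```

`wait_for_http http://127.0.0.1:3848` returns as soon as the page responds; if it gives up, `docker logs open-webui` usually shows why the container is not ready.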

Caddy

```caddyfile
yourdomain.com {
    encode zstd gzip
    reverse_proxy 127.0.0.1:3848
}
```
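If you manage several sites, generating the Caddy block from a function keeps the domain and port in one place. This is a sketch with a hypothetical `caddy_site` helper that prints the same site block as above; Caddy's automatic HTTPS should obtain a certificate for the domain as long as its DNS points at the server and ports 80 and 443 are reachable.

```shell
#!/bin/sh
# Sketch: print a Caddyfile site block for Open WebUI.
# DOMAIN and PORT are placeholders for your own values.
set -eu

# caddy_site DOMAIN [PORT]
caddy_site() {
  local domain="$1" port="${2:-3848}"
  cat <<EOF
${domain} {
    encode zstd gzip
    reverse_proxy 127.0.0.1:${port}
}
EOF
}
```

`caddy_site yourdomain.com 3848 | sudo tee -a /etc/caddy/Caddyfile` appends the block; then `sudo caddy validate --config /etc/caddy/Caddyfile` checks the file and `sudo systemctl reload caddy` applies it. These paths and the service name assume the official Caddy package for Debian/Ubuntu.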

nginx
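The nginx configuration below references a DH parameter file, a certificate, and a private key, and `nginx -t` fails if any of them is missing. Here is a small sketch to check for them first; `missing_files` is a hypothetical helper, and the paths you pass should be the ones in your own config.

```shell
#!/bin/sh
# Sketch: report which files referenced by an nginx config do not exist.
set -eu

# missing_files PATH...: prints each missing path; returns 1 if any are missing.
missing_files() {
  local f status=0
  for f in "$@"; do
    if [ ! -e "$f" ]; then
      echo "missing: $f"
      status=1
    fi
  done
  return "$status"
}
```

For example, run `missing_files /etc/nginx/dhparam.pem` followed by your `ssl_certificate` and `ssl_certificate_key` paths. A missing DH file can be generated with `sudo openssl dhparam -out /etc/nginx/dhparam.pem 2048`, and the certificate can come from an ACME client such as Certbot. After saving the site config, `sudo nginx -t && sudo systemctl reload nginx` tests and applies it.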

```nginx
server {
    listen *:80;
    server_name yourdomain.com;

    error_log /var/log/nginx/yourdomain.com-80_error.log;
    # No format name given, so nginx uses its built-in "combined" log format
    access_log /var/log/nginx/yourdomain.com-80_access.log;

    add_header Strict-Transport-Security 'max-age=31536000' always;
    add_header X-Frame-Options 'deny' always;
    add_header X-Content-Type-Options 'nosniff' always;
    add_header X-XSS-Protection '1; mode=block' always;

    # Send every plain HTTP request to HTTPS
    return 301 https://$server_name$request_uri;
}

server {
    listen *:443 ssl;
    server_name yourdomain.com;

    error_log /var/log/nginx/yourdomain.com-443_error.log;
    access_log /var/log/nginx/yourdomain.com-443_access.log;

    add_header Strict-Transport-Security 'max-age=31536000' always;
    add_header X-Frame-Options 'deny' always;
    add_header X-Content-Type-Options 'nosniff' always;
    add_header X-XSS-Protection '1; mode=block' always;

    ssl_protocols TLSv1.2 TLSv1.3;
    ssl_prefer_server_ciphers on;
    ssl_ciphers 'AES256+EECDH:AES256+EDH';
    ssl_ecdh_curve 'secp384r1';
    ssl_dhparam '/etc/nginx/dhparam.pem';
    ssl_certificate '/etc/ssl/example/yourdomain.com.crt';
    ssl_certificate_key '/etc/ssl/example/yourdomain.com.pem';

    location / {
        # Forward everything to the Open WebUI container
        proxy_pass http://localhost:3848;
        proxy_http_version 1.1;
        proxy_redirect off;

        # WebSocket upgrade headers, used by the chat interface
        proxy_set_header Connection "Upgrade";
        proxy_set_header Upgrade $http_upgrade;

        proxy_set_header Host $host;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_set_header X-Forwarded-Host $http_host;
        proxy_set_header X-Forwarded-Port 443;
        proxy_set_header X-Forwarded-Proto $scheme;
        proxy_set_header X-Real-IP $remote_addr;

        # No buffering and long timeouts so streamed answers arrive as they are generated
        chunked_transfer_encoding off;
        proxy_buffering off;
        proxy_connect_timeout 3600s;
        proxy_read_timeout 3600s;
        proxy_send_timeout 3600s;
        send_timeout 3600s;

        # Allow uploads of any size
        client_max_body_size 0;
    }
}
```

Connecting an API service

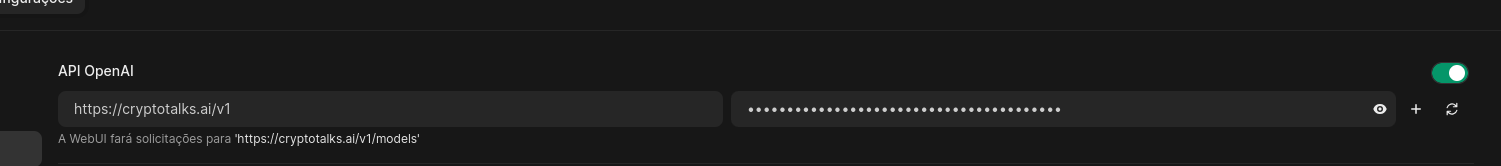

You can use the official OpenAI API or a different OpenAI-compatible provider. In my case, I am using Cryptotalks.ai.

Once you have an account there and an API token, you can set it up at https://yourdomain.com/admin/settings.
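Before saving the token in Open WebUI, you can check it from the command line. This sketch assumes the provider implements the OpenAI-style `GET /models` endpoint under its base URL (https://cryptotalks.ai/v1 in my case); that is an assumption, so check your provider's documentation. `API_KEY`, `models_url`, and `list_models` are placeholder names.

```shell
#!/bin/sh
# Sketch: test an API token against an OpenAI-compatible provider.
# Assumes an OpenAI-style GET /models endpoint; API_KEY is a placeholder you export.
set -eu

# models_url BASE_URL: appends /models, dropping one trailing slash from the base.
models_url() {
  echo "${1%/}/models"
}

# list_models BASE_URL: prints the provider's JSON model list; fails on HTTP errors.
list_models() {
  : "${API_KEY:?export API_KEY with your provider token first}"
  curl -fsS -H "Authorization: Bearer ${API_KEY}" "$(models_url "$1")"
}
```

After `export API_KEY=your-token`, running `list_models https://cryptotalks.ai/v1` should print a JSON list of models if the token and URL are right; an HTTP 401 error points at the token.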

You can turn on the OpenAI API and use the following base URL, with your API token as the key:

https://cryptotalks.ai/v1

Furthermore, make sure to turn off the Ollama API unless you want to run AI locally. If that is what you want, your VPS will need lots of RAM (at least 8 GB, I would say), and it will be very slow unless the VPS has a GPU.
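If you do plan to run models locally through Ollama, a quick RAM check against that rough 8 GB figure might look like this. It is a sketch for Linux that reads `MemTotal` from `/proc/meminfo`; the helper names are hypothetical, and the real requirement depends on the size of the models you load.

```shell
#!/bin/sh
# Sketch: compare total RAM with a minimum before enabling local models.
# The 8 GiB default mirrors the rough figure above, not a hard requirement.
set -eu

# mem_total_gib [MEMINFO_FILE]: prints total memory rounded to the nearest GiB.
mem_total_gib() {
  awk '/^MemTotal:/ { printf "%d\n", $2 / 1048576 + 0.5 }' "${1:-/proc/meminfo}"
}

# enough_ram [MIN_GIB] [MEMINFO_FILE]: succeeds if total RAM is at least MIN_GIB.
enough_ram() {
  local min="${1:-8}" have
  have="$(mem_total_gib "${2:-/proc/meminfo}")"
  [ "$have" -ge "$min" ]
}
```

On the VPS, `enough_ram 8 && echo ok` gives a quick answer. The total is rounded rather than truncated because the kernel reports slightly less memory than is physically installed.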

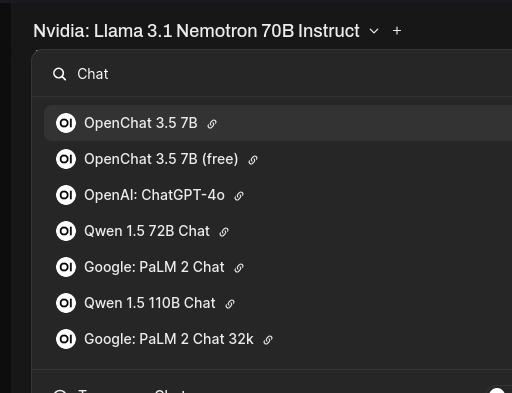

Now you can choose whichever API model you want.

Conclusion

That's it! I hope you enjoyed the guide. If you need any clarification, leave a comment and I'll help you.

If you enjoyed this article, you can share it with your friends or subscribe to The Self Hosting Art. Thank you for reading :)

You can also support me with XMR (Monero):

8AWKRGyqQ6fdaLwGVAdVTbEP6ZttSXwcYWQWy7gnq6zceTngtJgaAr82Hxr2FY5bkCUJVerccH9XNFX1qWnZxuGYTU5bJ34